In recent months, it seems that all we hear about is the next big thing in artificial intelligence. To a degree, that’s true: the past year has seen an explosion in the number of publicly available AI solutions for ordinary people to use.

As with most new things in the tech sector, this comes with security risks, but not the usual ones you might be thinking of. I’m talking about the ability of attackers to manipulate AI systems and inject data that produces potentially hazardous results.

Only recently, researchers managed to fool a self-driving car’s autonomous system into thinking a stop sign was something else. Can you guess what from the image above? No, me neither, but their research showed that adding the above markings to a stop sign made the car think it was a 45 mph speed limit sign.

The concern among security professionals, such as the head of the National Cyber Security Centre in the UK and the former head of the signals intelligence agency GCHQ, is that while companies are fighting to be top dog in the AI space, security is getting left behind.

For years, a small group of experts has worked on adversarial machine learning, which studies how AI and machine learning systems can be tricked into giving bad results.

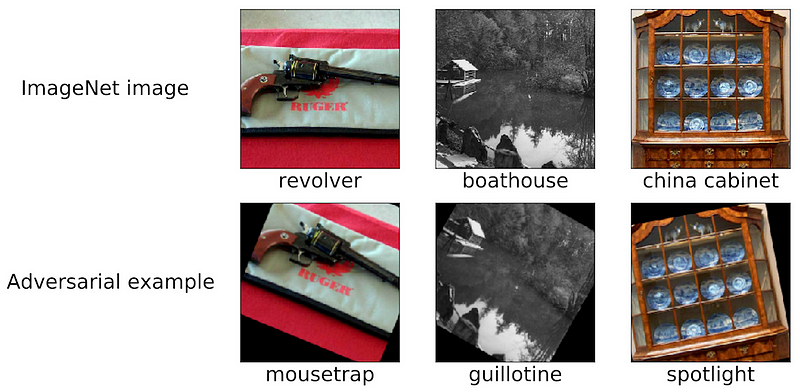

Examples of adversarial attacks

It doesn’t stop at the manipulation of street signs, though. Another field currently being exploited involves poisoning the data the AI learns from. This is a major problem for AI systems: poisoned data is hard to spot, and usually the attack can only be detected after the fact through forensic analysis.
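To make data poisoning concrete, here is a minimal sketch in Python with NumPy. Everything in it is an assumption made for illustration: the two-blob dataset, the nearest-centroid classifier, and the helper names `make_data`, `fit_centroids` and `predict` are hypothetical and do not come from any real incident or library. It shows how a batch of mislabelled points slipped into the training set can drag a model's decision boundary and hurt its accuracy on clean data.

```python
import numpy as np

def make_data(rng, n_per_class):
    """Two Gaussian blobs: class 0 around (-2, 0), class 1 around (2, 0)."""
    X0 = rng.normal(loc=(-2.0, 0.0), scale=1.0, size=(n_per_class, 2))
    X1 = rng.normal(loc=(2.0, 0.0), scale=1.0, size=(n_per_class, 2))
    X = np.vstack([X0, X1])
    y = np.array([0] * n_per_class + [1] * n_per_class)
    return X, y

def fit_centroids(X, y):
    """'Train' the toy model: compute the mean point of each class."""
    return np.stack([X[y == k].mean(axis=0) for k in (0, 1)])

def predict(centroids, X):
    """Assign each row of X to the class whose centroid is nearest."""
    dists = np.linalg.norm(X[:, None, :] - centroids[None, :, :], axis=2)
    return dists.argmin(axis=1)

rng = np.random.default_rng(0)
X_train, y_train = make_data(rng, 100)
X_test, y_test = make_data(rng, 500)

# Hypothetical attack: 100 far-away points are injected into the
# training set, all mislabelled as class 0.
X_poison = np.tile([10.0, 0.0], (100, 1))
X_bad = np.vstack([X_train, X_poison])
y_bad = np.concatenate([y_train, np.zeros(100, dtype=int)])

# Evaluate both models on the same clean test set.
clean_acc = (predict(fit_centroids(X_train, y_train), X_test) == y_test).mean()
poisoned_acc = (predict(fit_centroids(X_bad, y_bad), X_test) == y_test).mean()

print(f"accuracy trained on clean data:    {clean_acc:.2f}")
print(f"accuracy trained on poisoned data: {poisoned_acc:.2f}")
```

Nothing in the poisoned model's code looks wrong, which is part of why this kind of attack tends to surface only when someone goes back and inspects the training data.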

On the left, you can see a picture of a pig that a cutting-edge convolutional neural network correctly identified as one. On the right, the image has been minimally altered (every pixel is in the range [0, 1] and changed by no more than 0.005), yet the network now confidently returns the class “airliner” for it.

Such attacks on trained classifiers have been studied since at least 2004 (link), and work on adversarial examples for image classification dates back to 2006 (link).
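The pig example can seem implausible: how can a change of at most 0.005 per pixel flip a prediction? The sketch below, in Python with NumPy, illustrates the idea on a toy linear classifier. It is not the convolutional network from the example. The random weights, the synthetic "image", the bias chosen so the clean input scores as "pig", and the helper names `logit`, `label` and `linf_perturb` are all assumptions made for illustration.

```python
import numpy as np

def logit(w, b, x):
    """Score of a toy linear classifier: > 0 means 'pig', otherwise 'airliner'."""
    return float(w @ x + b)

def label(w, b, x):
    return "pig" if logit(w, b, x) > 0 else "airliner"

def linf_perturb(w, b, x, eps=0.005):
    """Shift every pixel by at most eps against the current prediction.

    For a linear model the gradient of the score with respect to the
    input is simply w, so the most damaging bounded step is eps * sign(w).
    """
    step = -np.sign(w) if logit(w, b, x) > 0 else np.sign(w)
    # Keep the result a valid image with pixels in [0, 1].
    return np.clip(x + eps * step, 0.0, 1.0)

rng = np.random.default_rng(0)
n_pixels = 224 * 224 * 3                  # size of a typical RGB input (assumed)
w = rng.normal(size=n_pixels)             # made-up classifier weights
x = rng.uniform(0.1, 0.9, size=n_pixels)  # made-up "image" with pixels in [0, 1]
b = 100.0 - float(w @ x)                  # bias chosen so the clean score is +100

x_adv = linf_perturb(w, b, x)

print("clean prediction:      ", label(w, b, x))
print("adversarial prediction:", label(w, b, x_adv))
print("largest pixel change:  ", float(np.abs(x_adv - x).max()))
```

Each pixel moves by a tiny amount, but the score shifts by roughly `eps` times the sum of the absolute weights, and with about 150,000 inputs those tiny nudges add up to far more than the clean score's margin. Deep networks are not linear, but the same accumulation effect is one common explanation for why imperceptible changes can fool them.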

Here you can see another example: simply rotating an image can produce unexpected results.

Imagine if AI were used to spot weapons: this simple manipulation could mean the system misses something.

So what is the concern?

The threat extends beyond disruption-seeking hackers to national security more broadly.

If AI were employed to examine satellite imagery in search of a military build-up, a malicious attacker could work out how to make it either miss genuine tanks or see a number of phoney ones.

Until recently, these worries were purely theoretical, but there are now indications that systems are actually being attacked.

Summary

As producers of AI-enabled systems, we need to apply the same level of caution that we apply when developing any other software. The danger is that AI is moving at such a pace that security professionals are unable to keep up with understanding the attack vectors for these systems.

That is a dangerous position for the industry to be in and, more than anything else, it has the potential to undermine the whole development of AI.